Parliament on Wednesday (9 Nov) passed a new law, the Online Safety (Miscellaneous Amendments) Bill, that will enhance online safety and require social media platforms to take down or block egregious content. Should a social media site refuse to comply, the Infocomm Media Development Authority can direct internet service providers to block access to it.

The Bill seeks to provide a safe online environment for Singapore, deter objectionable online activity and prevent access to harmful content. It also gives priority to protecting children of different age groups from exposure to content that may be harmful to them.

Such harmful content includes content on suicide, self-harm, sexual violence, sexual exploitation of a child and terrorism, as well as content that poses health risks or incites racial or religious tensions. Social media sites that do not comply could face a fine of up to $1 million or have their services blocked in Singapore.

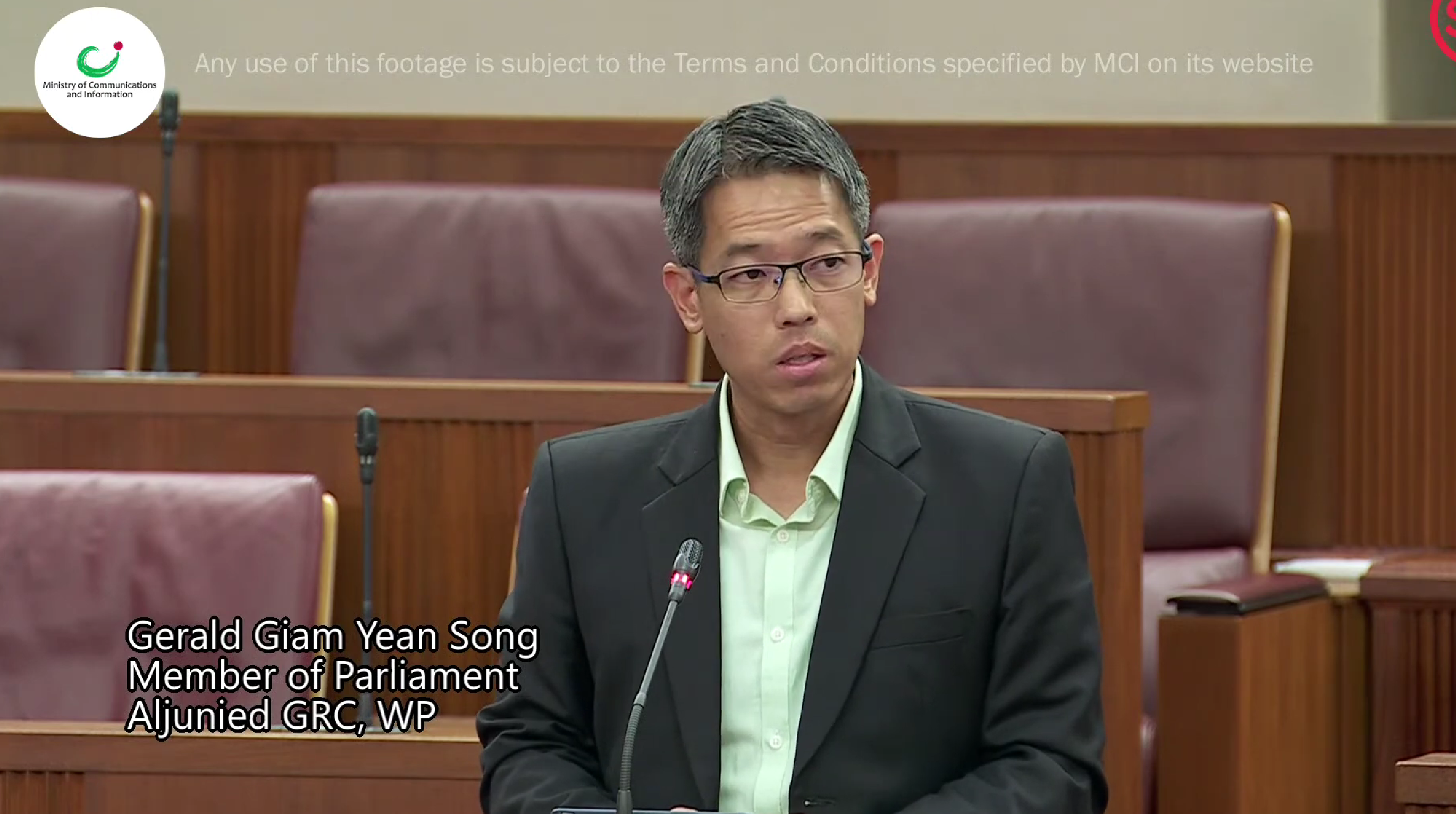

Before the Bill was passed, Workers’ Party Member of Parliament Gerald Giam asked how the new law would protect the young from the dangers of unfiltered content on the internet, given that there are no age verification mechanisms in place to prevent them from accessing harmful content.

“Access to digital communications is not optional in this day and age, even for younger children. For example, if a ten-year-old child takes public transport on his own to and fro from school, his parents would want him to contact them in case of an emergency or track his location. In most cases this can only be done with a mobile phone or a smartwatch,” said Giam during the second reading of the Bill on Tuesday.

“However, it would be unwise to give that same unfiltered access to the internet on his phone. Currently, parents can install a parental control app on their child’s phone. This app will allow parents to restrict content, approve apps, set screen times and filter harmful content. It can also locate the child using GPS.”

“Attempts at imposing age verification have previously failed in the United Kingdom’s implementation of a Digital Economy Act of 2017, in part because of privacy concerns. Separately, a young user can circumvent age restrictions by declaring his age to be 18 when in fact he is only 12.”

The Aljunied GRC MP went on to acknowledge that age verification might prove challenging, and said it would be better for internet service providers themselves to block harmful content at the network level by default, “rather than to expect parents to set up complicated filtering software on their children’s devices.”

“This remote filtering should be activated by default for all new mobile and broadband subscriptions and offered for free for all subscribers. This will ensure that children of less tech-savvy parents will be protected by default. Adults that need full access to the internet should be able to opt-out of the filtering service without any charge,” explained Giam.

The WP MP also suggested that social media services be required to submit monthly reports listing the types of harmful or inappropriate content or behaviours that users have flagged, so that the authorities can stay apprised of the latest trends. He also asked what measures are in place to ensure that enforcement powers are not abused, and what protections there will be for democratic freedoms.